One of the most frequently asked questions about FileMaker is how to deal with damaged files. There is a glut of choices when attacking this problem, and there doesn’t seem to be one single answer. Compact, Optimize, or Recover? Maybe `Save a Copy As -> Clone`? Which one works, and why? Hopefully, this article will answer a lot of your questions.

As I mentioned in my FileMaker DevCon: Day 2 article, Jon Thatcher, FileMaker Server guru, gave an excellent presentation on exactly what you should do when a file is damaged. He also gave some very in-depth explanations of what each option actually does “under the hood.” I’ve broken a lot of this down for easy consumption, but almost all of this info comes from his DevCon session.

##What causes a damaged FileMaker file?##

The most common way a FileMaker file becomes damaged is FileMaker Server closing unexpectedly on the server computer. This can happen for any number of reasons. Perhaps the power went out and, for some crazy reason, you didn’t put your FileMaker Server on a UPS (that was **very** not smart). Maybe one of those fantastic Windows Server programs decided your FileMaker Server was a virus and shut it down. My favorite is when the night crew comes in, plugs in their vacuum, and blows a circuit on your UPS, causing your FileMaker Server to lose power. Seriously, that has happened to me. Whenever FileMaker Server closes unexpectedly, whether it is just the application or the whole computer, there has likely been some damage to the file.

> Note: If you are getting strange results when you search in a field, or a script hangs for no apparent reason when you go to a layout, it may be something as simple as a bad index. To fix this, turn off indexing on the field and then turn it on again (you have to close and re-open the Manage Database window between changes). Rebuilding the index may take a while, so make sure you do this after hours.

FileMaker files have many more opportunities for damage when they are shared without being hosted on FileMaker Server. I won’t even get into these, because things as innocuous as network latency may damage your shared file (extreme cases, but you get my drift), so *don’t do it*. Buy FileMaker Server instead.

##FileMaker Server 9 Consistency Check##

The most common way people find out that there is a problem with their file is that they fire it up in FileMaker Server 9 and it *won’t open*. FileMaker 9 tells you that the *file is damaged and must be recovered*. This is the source of much consternation, because many people moved these files, which they thought were perfectly fine, directly over from their FileMaker Server 8 install.

What these people are experiencing is FileMaker Server 9’s consistency check. The consistency check performs a cursory inspection of the file, looking for common types of corruption. Specifically, it does the following:

1. Asserts that the block structure (4K blocks) is intact

1. Verifies that the next and previous blocks are there
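To make those two checks concrete, here is a toy model in Python. It assumes a simplified block layout (an ID, previous/next pointers, and a 4K payload) that is purely illustrative. FileMaker’s actual file format is different and more involved, so treat this as a sketch of the idea only.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

BLOCK_SIZE = 4096  # "4K blocks", as described in the session


@dataclass
class Block:
    block_id: int
    prev_id: Optional[int]  # None for the first block in the chain
    next_id: Optional[int]  # None for the last block in the chain
    payload: bytes


def consistency_check(blocks: Dict[int, Block]) -> List[str]:
    """Return the structural problems found; an empty list means the check passed.

    The payload bytes are never interpreted, only their length is looked at,
    which mirrors why a passing check says nothing about field data.
    """
    problems = []
    for b in blocks.values():
        # 1. Block structure: every block must be exactly BLOCK_SIZE bytes.
        if len(b.payload) != BLOCK_SIZE:
            problems.append(f"block {b.block_id}: size {len(b.payload)}")
        # 2. The next and previous blocks must exist, and the next must link back.
        if b.next_id is not None:
            nxt = blocks.get(b.next_id)
            if nxt is None:
                problems.append(f"block {b.block_id}: next block {b.next_id} missing")
            elif nxt.prev_id != b.block_id:
                problems.append(f"block {b.block_id}: next block does not link back")
        if b.prev_id is not None and b.prev_id not in blocks:
            problems.append(f"block {b.block_id}: previous block {b.prev_id} missing")
    return problems


chain = {
    1: Block(1, None, 2, bytes(BLOCK_SIZE)),
    2: Block(2, 1, None, bytes(BLOCK_SIZE)),
}
print(consistency_check(chain))  # []
```

A block full of garbage still passes as long as it is the right size and properly linked, which is exactly why the check can’t vouch for the data inside your fields.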

When FileMaker Server 9 detects any problems during its inspection, it will suggest that you recover the file. This actually isn’t strictly necessary, but we will go over your other options later.

> Note: The consistency check does not actually verify the data stored within the fields in your file, so you may still have problems with the information in your file itself (e.g., corrupt images in container fields).

##FileMaker Server 9 Backups##

FileMaker Server 9 comes with a nice little addition that may seem frivolous at first, but it is actually a great tool for proactively fighting corruption. As previously stated, there are many ways for files to become corrupted, and the only real way FileMaker gives you to detect corruption is to run a consistency check on the file. Opening and closing the file over and over definitely sounds a little tedious to me, and that is where the backup consistency check comes in.

If you check the `perform consistency check` box in your backup schedule, then whenever your backup runs, the backup file it creates will be run through the same consistency check that occurs when a file is opened on the server. Here are a few tips on using the backup consistency check:

1. It occurs after hosted files have been resumed

1. It should be enabled for important files that change often

1. It is slower than the backup process itself (but who cares, it’s being done on the backup)

The primary value of this check isn’t to be sure the backup worked. Rather, it is to check your file periodically (like every night, or a few times a day) to make sure it hasn’t become corrupted. Since the backup is essentially a copy of the live file, if it is damaged, you might have problems with the live file that need your attention, and now FileMaker will tell you.

##How often should I back up?##

This is one of those points in life where less is *definitely* not more. “Back up early and often” would be a much better cliché. The best thing to do is to set up a backup structure like this:

* Once Daily with consistency check

* Hourly Odd

* Hourly Even

If you have the hourly odd and even backups set up and you are forced to go to a backup, you will be able to recover almost all of your lost data without much hassle. Having a backup scheduled for each day (e.g., Sun, Mon, Tue…) allows you to go back to a previous day if you find your hourly backups damaged as well. If you have the space, I would even expand the backup structure to include a backup every 3-4 hours on each day (e.g., Mon-4am, Mon-8am, Mon-noon). It is definitely a hassle to set up all these different backup folders and schedules, but it saves a lot of time and important data when something goes wrong.
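The rotation is easier to see written out. This is a hypothetical sketch: the folder names, the 2am daily slot, and the 4-hour tier are assumptions, and in practice you set these up as separate schedules in the FileMaker Server admin tool rather than in a script.

```python
from datetime import datetime
from typing import List

DAY_NAMES = ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")
DAILY_HOUR = 2  # hypothetical: the once-daily backup runs at 2am


def backup_targets(ts: datetime) -> List[str]:
    """Folders (hypothetical names) that a backup taken on the hour at ts writes to."""
    day = DAY_NAMES[ts.weekday()]  # weekday(): Monday == 0
    # Hourly backups alternate between two folders, so while one is being
    # overwritten the other still holds the previous hour's copy.
    targets = ["Hourly_Odd" if ts.hour % 2 else "Hourly_Even"]
    # Optional extra tier: every 4 hours into a per-day slot (Mon-0400, Mon-0800, ...),
    # which survives for a week until that same slot comes round again.
    if ts.hour % 4 == 0:
        targets.append(f"{day}-{ts.hour:02d}00")
    # Once a day into a per-weekday folder; this is the schedule that would
    # have "perform consistency check" turned on.
    if ts.hour == DAILY_HOUR:
        targets.append(f"Daily_{day}")
    return targets


for hour in (2, 3, 8):
    print(hour, backup_targets(datetime(2007, 8, 13, hour)))  # 2007-08-13 was a Monday
```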

> Hint: Take advantage of FileMaker Server 9’s new feature that sends email when errors are encountered. This may allow you to catch corruption before it becomes too pervasive, and it can also alert you if the backup fails because you’re out of space, a disk is unavailable, or for any other reason.

##Dealing with FileMaker Corruption##

Now that we have gone through all the boring stuff, how do we actually approach a corrupted file? There are many different options. The `Tools -> File Maintenance` menu gives us `Compact` and `Optimize`. There is `File -> Recover`, and there are also a couple of interesting options in the `File -> Save a Copy As` menu. Which one is right? Before we answer that question, we should probably go over each option. I will cover them as briefly as possible and save the discussion of which process to use until the end.

> Note: Don’t care about the options and just want to know what to do? [Jump to the Nitty-Gritty](#nitty-gritty)

###Save a Copy As -> Clone###

This command is very useful, but not really for fixing corruption. It gives you an exact duplicate of your file’s structure without any of the data. You get all your tables, fields, scripts, and other important stuff without any records to distract from its beauty. Another interesting feature of this function is that it removes all the locale info from the file. The locale info dictates how region-specific data such as dates and times is displayed in FileMaker.

The locale info is set on a FileMaker file when it is opened for the first time, and the same goes for the clone you have created. Since the clone is basically region-agnostic until it is opened, it is ideal when you want to send a copy of your system somewhere like France, where the date format and default language are different.

###Save a Copy As -> Copy###

Yup, this is as boring as you thought. This function creates a block-for-block copy of your file, just like the FileMaker Server backup feature does. In terms of corruption problems: useless.

###Save a Copy As -> Compacted###

Here is your workhorse. The `Save a Copy As -> Compacted` command is going to be the most useful command when you’re trying to keep your file clean or remove most corruption. It employs the same `Compact` and `Optimize` processes you find in the `File Maintenance` menu, but they run on the copy of the file, not on the original file itself. This protects your original data and also gives you FileMaker’s best attempt at a cleaner copy of it. Since this function uses the `File Maintenance` menu commands, I should probably explain them.

###File Maintenance -> Compact###

Yeah, the name pretty much says it all here. FileMaker removes the space between blocks and combines blocks to create a smaller file.

###File Maintenance -> Optimize###

Organizes the blocks of data so that they are in sequential order. As a result, the content is organized in table and record-creation order.

> Note: There is limited value to this, because we typically operate on data based on a found set, whose records are often not in creation order.
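Likewise, here is a toy sketch of Optimize. It assumes each stored record knows its table and a hypothetical `creation_seq` counter; the real file obviously doesn’t look like a Python list, but the resulting order is the one described above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StoredRecord:
    table: str
    creation_seq: int  # hypothetical: the order the record was created in its table
    data: str


def optimize(on_disk: List[StoredRecord], table_order: List[str]) -> List[StoredRecord]:
    """Return the records in the order Optimize would lay them out:
    grouped by table, then by record creation order."""
    rank = {name: i for i, name in enumerate(table_order)}
    return sorted(on_disk, key=lambda r: (rank[r.table], r.creation_seq))


# Edits over time have scattered records across the file.
disk = [
    StoredRecord("Invoices", 2, "INV-2"),
    StoredRecord("Customers", 1, "Acme"),
    StoredRecord("Invoices", 1, "INV-1"),
    StoredRecord("Customers", 2, "Bolt"),
]
print([r.data for r in optimize(disk, ["Customers", "Invoices"])])
# ['Acme', 'Bolt', 'INV-1', 'INV-2']
```

A found set sorted by, say, customer name will not follow this order, which is the limited value the note above refers to.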

###The Recovery Command###

This one is in a different league than the other commands, because it’s the only one that will actually drop data from the file. FileMaker recovery entails scanning *all* the blocks in the file and validating them. Any invalid blocks are skipped and left out of the recovered file. A valid block has the following properties:

1. Both the next and previous blocks are in the file (each block contains data on the next/previous block)

1. It is internally consistent (i.e., it has the required length of 4K and is in the correct order)

1. It is not a duplicate of a preceding block
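Here is the same kind of toy block model, this time applying the three validity rules the way recovery is described: scan everything, keep what passes, drop the rest. The rules are simplified, and treating a “duplicate” as a repeated block ID is my assumption.

```python
from dataclasses import dataclass
from typing import List, Optional

BLOCK_SIZE = 4096


@dataclass(frozen=True)
class Block:
    block_id: int
    prev_id: Optional[int]
    next_id: Optional[int]
    payload: bytes


def recover(scanned: List[Block]) -> List[Block]:
    """Single pass over *all* scanned blocks, keeping only the valid ones.

    Anything dropped here takes its records and field data with it.
    """
    present = {b.block_id for b in scanned}
    seen, kept = set(), []
    for b in scanned:
        # Rule 1: the next and previous blocks it points to are in the file.
        links_ok = all(i is None or i in present for i in (b.prev_id, b.next_id))
        # Rule 2: internally consistent (reduced here to: exactly 4K long).
        size_ok = len(b.payload) == BLOCK_SIZE
        # Rule 3: not a duplicate of a preceding block (assumed: same block ID).
        duplicate = b.block_id in seen
        if links_ok and size_ok and not duplicate:
            kept.append(b)
            seen.add(b.block_id)
    return kept


scan = [
    Block(1, None, 2, bytes(BLOCK_SIZE)),
    Block(2, 1, None, bytes(BLOCK_SIZE)),
    Block(2, 1, None, bytes(BLOCK_SIZE)),  # duplicate of a preceding block
    Block(3, 2, None, b"truncated"),       # wrong length
    Block(4, 9, None, bytes(BLOCK_SIZE)),  # points at a block that isn't there
]
print([b.block_id for b in recover(scan)])  # [1, 2]
```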

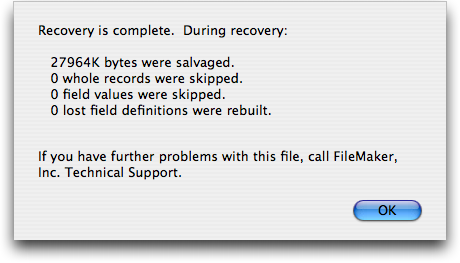

Along with validating the blocks, the recovery process rebuilds the internal IDs of the records and repairs the catalogue. In some extreme cases, fields that were lost due to corruption can actually be recovered. Ideally, you won’t lose any data, your file will be completely salvaged, and you’ll see this reassuring dialog:

> Note: Restored fields default to type `Text`, unless they are used by a summary field, in which case they are `Number`.

##How to Fix a Damaged File##

Don’t. I know, after all that reading you are probably pretty pissed with that answer. Seriously though, when a file is damaged I would strongly recommend going back to the most recent clean backup and working from there. A damaged file is never 100% clean.

FileMaker provides you with some great tools to attack the problem, but there is no definite answer as to whether the file is completely fixed or whether some hidden corruption lurks deep within it where it cannot be detected. As a general rule, damaged files should not be returned to production if you have a viable backup to replace them. This is definitely the ideal case, but not necessarily the reality for everyone out there.

##How to Fix a Damaged File (For Real)##

So you’ve determined that there is no possible way for you to go to backup. Once you have made that decision your path is actually quite simple. First, you need to use your `File -> Save a Copy As` command and choose `Compacted`. Then, only if the Compacted copy option fails, use the `File -> Recover` command. *Never* use `File Maintenance -> Compact` or `Optimize`.

The End

Just joking. After those statements I should probably add some justification for my thoughts (stolen from Jon Thatcher’s session). I’ll start with my last assertion: *never* use `File Maintenance -> Compact` or `Optimize`. The reason is that these operations are performed on your production file. Not a copy, the *real* thing. The term for this is an in-place operation: you are working on the very file you have open in your FileMaker Pro client. This is not the ideal way to deal with removing corruption.

> Note: Jon Thatcher actually said *never* use the File Maintenance menu in his session, so I’m not exaggerating for effect, I promise.

If you use the `File -> Save a Copy As` command and choose `Compacted`, you are performing the same functions as the File Maintenance commands, but on a *copy* of your damaged file. Your original file is not modified at all by this command. Also, as a fringe benefit, the copy is written to a different part of the disk, which helps rule out a bad spot on the drive as the source of your problem.
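The copy-first principle applies to any maintenance job, not just FileMaker’s. As a general illustration (FileMaker doesn’t need this, since `Save a Copy As` does it for you), this Python sketch copies a file, runs a hypothetical `operation` on the copy, and then verifies by hash that the original’s bytes never changed:

```python
import hashlib
import shutil
from pathlib import Path
from typing import Callable


def file_digest(path: Path) -> str:
    """SHA-256 of a file, read in 1 MB chunks so large files are fine."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def on_a_copy(original: Path, copy_path: Path, operation: Callable[[Path], None]) -> Path:
    """Run a maintenance step on a copy, never in place, and prove afterwards
    that the original's bytes are unchanged.

    Only use this on a *closed* file: copying a file while FileMaker has it
    open can itself produce a damaged copy.
    """
    before = file_digest(original)
    shutil.copy2(original, copy_path)
    operation(copy_path)  # stand-in for a compact/optimize step (hypothetical)
    if file_digest(original) != before:
        raise RuntimeError(f"{original} changed during maintenance")
    return copy_path
```

If the operation crashes halfway through, the worst case is a bad copy you can delete; the original is still exactly as it was.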

You may have already guessed the reason for using `File -> Recover` only as a last resort. I mentioned that invalid blocks are left out of the file when it is recovered. To put this more succinctly: the `Recover` process can cause the loss of records. Actually, not only records but also data within records (i.e., field data). So if data integrity is your goal, which it more than likely is, I would use this process only if absolutely necessary.

Thanks, Jesse. Nice try, but the pig with lipstick is still a pig. I saw this lecture at DevCon, and have to say that the info presented was as bad as the Recovery dialog box that we have seen for the past 13 years.

Bottom line- if your file needs recovery, you might/might not be screwed, and FMI gives you no tools to make a decision as to whether you are or aren’t. “XXXXXXk bytes were salvaged” is useless. Bytes?? In 2007?? Are you kidding??

We have multi-table FM files now that are gigabytes, routinely. What I hear from FMI is that I should rebuild those files every time there is a hiccup, or I am treading on shaky ground.

Shame on FMI that the “recovery tools” are so primitive and obtuse.

How about this- an interface that looks like it was done post 2000? A toolset that includes DETAILED info on what might be wrong with the file? Or better yet, a CHECKLIST of items to troubleshoot after a corruption occurs?

Some very useful tips there thanks 🙂

I’ll be sure to remember to use Save As Compacted in the future if I ever need it!

@ddeguz: I feel your pain. When you have corruption, it can really hurt. I think the real heart of the problem is the monolithic file concept.

Every database system on earth has file corruption problems. They’re simply unavoidable when you have very large files being constantly updated all the time. When I worked at Micron, we used Sybase very heavily (we had something like 100+ production Sybase servers, and some tables had hundreds of millions of rows). And I know the DBAs were dealing with one corrupted database or another often. Of course at that level you also have the option to buy very high level support, so they had regular visits from Sybase engineers who could crack open the files and help hunt things down.

But the real advantage to Sybase is that the database is completely reproducible. It is just data, and the entire schema is encoded in SQL, which is sort of a source code for the schema. So if the database files get corrupted, you can easily create new “clones” that are guaranteed corruption free. You then only have to worry about getting the data out of the damaged files, cleaned up, and restored. And your application (UI, scripts, whatever) is all elsewhere, again in code, and unaffected by the corruption, so it is not a concern. You certainly don’t have to rebuild it when your data gets hosed.

If you deal with very large files, I strongly recommend you move to the data separation model. In that environment, your data file(s) can be easier to work with. In particular, in the worst case, you can re-create a file with just tables, fields, and relationships, and then wire it back in to the system, which is a lot easier than recovering layouts, scripts, and so forth. Even better, it is much easier to keep a golden copy of your data file to restore to whenever a problem occurs because it changes much less often than the interface file.

Thanks for the feedback.

Geoff- good comments, and I agree completely. My point (which on re-read was too sarcastic, sorry about that) is that huge strides have been made in FM 9- file size, speed, features, etc- but our recovery tools and options have remained almost unchanged since FM 3. I figured after the big re-write from FM 6 that there would be some attention paid to this, and I thought it ESPECIALLY odd that the main tech lead at FileMaker for FM Server specifically counseled against one of the “improvements” in File Utilities that we do have. As Jesse writes above “Note: Jon Thatcher actually said never use the File Maintenance menu in his session so I’m not exaggerating for effect I promise”.

What does that mean for developers, if there is a potentially damaging tool that sits so innocuously in the menu? Of course, buyer beware, but I think it underscores my point- the recovery/maintenance of FileMaker files has not kept up with the huge advances in the application. Maybe there are under the hood tweaks that we can’t see, but from a user feedback standpoint we are still years behind.

I think all of us have had that moment where we have a file that crashed and we open it, run a consistency check (or a recovery) and then we have to make that ugly decision as to whether or not the file is OK. Mostly guessing, I bet.

Could FileMaker build in a “back up replace tool” that would migrate data from a crashed file to an identical (schema, not data) backup, so that if you made no schema changes to your file it would only move the “changed/new data”?

Anyway, this was a good article that deals with the hand we are currently dealt, but I think we should all be pressing for a more robust recover/backup toolset that befits this fantastic application.

@ddeguz: I couldn’t agree more 🙂 I didn’t make it to this session, but as just one example, I have used the File Maintenance tools routinely. If the advice from the engineering side is “Don’t use it” then why is it there?

Jon’s larger point, I think is that it “works” but why risk it on the live file. If you crash, for instance, it could whack the file. Using the Save A Copy As method, if something goes wrong, you still have the untouched original. And if the file is in an unknown state, it is especially important to not “mess with it” until you’re sure you’ve at least gotten your data out.

Thanks for the great insights. I’m with you 100% 🙂

Thanks for this breakdown. I was in Jon’s session and used the “Save as Compacted” this week to fix some corruption in a record. I still don’t know if that’s better, worse or the same as going with Recovery.

The piece I continue to struggle with is the difference between some corruption of an element (script, layout, value list) vs. file corruption that happens after a server crash when you can’t open the file at all. I think they are very different things that should be addressed separately and I don’t think Jon did that in his session.

The most pressing point is that if a server crashes and I can’t reopen the file, I know when it happened and I know I can go to last night’s backup. With element corruption, it could be weeks or months before coming across that corruption. Even if I knew when it happened, there’s no way I could afford to throw away months of development.

I agree 100% with the previous posts. A detailed file damage procedure should be top priority as we build bigger and bigger solutions and try to gain confidence with IT departments.

Thanks again for the great summary!

@jmundok – As far as your confusion between catalogue (your element) versus file corruption, I really don’t think there is a difference. If your file doesn’t open, your catalogue (where all the scripts, layouts, fields, and other FileMaker goodness is stored) has probably been severely damaged. If you can still open the file, there is less damage to the catalogue. Either way, like you said, you are in trouble. It’s very difficult to detect what’s wrong with the file, and unfortunately, as you mentioned, you never know when it will finally fail.

As far as the difference between the Recover process and the Save as Compacted, I believe it helps to think of the Recover process as invasive surgery. It’s actually looking through the catalogue and ferreting out inconsistencies and also examining the validity of all the data blocks. It will remove any blocks it finds fishy in the data and repair whatever it can in the catalogue. It’s definitely tough to see exactly what it repaired but like you said…. maybe next release.

Save as Compacted is really just creating a block-for-block copy of the file, then compacting and optimizing it. It’s not actually repairing anything per se, but in doing so it makes your file as small and quick as possible.

At least that’s what I took away from the whole session… Hopefully, that will shed some more light on the subject. Maybe we’ll get lucky and Jon will read this and bring out the heavy hitters.

As a side note, he did give some helpful tips on how to import data into your backups, but that’s definitely difficult with larger solutions.

The biggest problem is that we are used to the ease and simplicity of creating a FileMaker file. It’s very straightforward and streamlined. Unfortunately, when you are dealing with FileMaker file corruption it’s just not that transparent.

BTW- here’s something I have been doing with my files these days, as part of ongoing development.

Assume a crash- you now have a problem file and there has been new data since the last backup.

I have a script that exports all records from all tables, and puts them in separate FM files in a folder so that I can import fresh into a clone, or look at the modification timestamps so that I reimport only the new/changed records.

Simplified, like this:

Export Data Script

Go to Layout [ “Layout_A” (Table_A) ]

Show All Records

Export Records [ File Name: “file:Table_A.fp7” ]

Go to Layout [ “Layout_B” (Table_B) ]

Show All Records

Export Records [ File Name: “file:Table_B.fp7” ]

Go to Layout [ “Layout_C” (Table_C) ]

Show All Records

Export Records [ File Name: “file:Table_C.fp7” ]

When the Export Records dialog comes up you have to pull down the Current Table choice, but generally this is easier than doing it manually. Any time I add a table I update the script, so when a crash occurs I’m at least sort of ready to deal with all the “Is FileMaker back up???” from the users.

Also, I use a specific naming convention to call out the fields that have autogenerated serial numbers, so that I make sure to reset those in the new file. I know you can script that, but I got burned once and prefer to see it with my own eyes.
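The reimport-only-what-changed idea from this comment can be sketched outside FileMaker too. Assuming each exported record is keyed by its serial ID and carries a modification timestamp (the field name `modified` is hypothetical), merging into the clean backup and working out the new serial value looks roughly like this:

```python
from datetime import datetime
from typing import Any, Dict

Record = Dict[str, Any]  # field name -> value; must include "modified"


def merge_changed(backup: Dict[int, Record], exported: Dict[int, Record]) -> Dict[int, Record]:
    """Start from the clean backup and bring in records (keyed by serial ID)
    that are new, or were modified later than the backup's copy.

    Records deleted after the backup was taken are NOT detected here.
    """
    merged = dict(backup)
    for serial, rec in exported.items():
        old = merged.get(serial)
        if old is None or rec["modified"] > old["modified"]:
            merged[serial] = rec
    return merged


def next_serial_value(records: Dict[int, Record]) -> int:
    """What to set the auto-enter serial's "next value" to after importing."""
    return max(records, default=0) + 1


backup = {1: {"name": "Acme", "modified": datetime(2007, 8, 13, 2)},
          2: {"name": "Bolt", "modified": datetime(2007, 8, 13, 2)}}
exported = {2: {"name": "Bolt Inc", "modified": datetime(2007, 8, 13, 10)},
            3: {"name": "Cog", "modified": datetime(2007, 8, 13, 11)}}
merged = merge_changed(backup, exported)
print(sorted(merged), next_serial_value(merged))
```

Resetting the serial to one past the highest imported ID is the step the comment warns about: skip it and new records will collide with imported ones.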

I just wish this was easier, since I’m only human and the machine could just do this a lot better than I could. Anybody else have any ERTs (Emergency Recovery Tips)?

First, a quick one. One time we had a file that would not recover and no recent backup. I was directing events remotely and suggested the client try to recover on Windows, as everything had been happening on Macs. It opened and we got it back.

Second, in response to the data separation suggestion: go halfway and do regular backups of just the data via export. To do this, go to Define Fields (as it was) and view by field type. Scroll down to the last field before the calculations and note it. Then come out of there and export all the data fields. You can go further and leave out the global fields.

This can be a lot of work. I used to automate it with the old OneClick but this became impossible with FM7 and after.

FM, the company, should provide an ‘Export Data fields’ command. Is the fact that they don’t a sign that they are still afraid of making migration to SQL databases easy?

@all: File corruption is a challenging topic. It helps to think about this reality: at the end of the day, a FileMaker file is a long string of bits. It may be billions of them, but it is essentially a huge binary number. At the lowest level it is in no way more “structured” than a plain text file. FileMaker Pro itself writes those bits according to its own internal standard so that they can get the combination of features and performance they want. So they take care to put just the right bits in just the right place so that later they can find, say, that email address in the middle of all those bits.

But the file is really and truly just a giant string of bits.

Now imagine one of those bits gets flipped. Maybe your hard drive failed in an undetectable way. Or perhaps FileMaker had a bug. Or your computer crashed while the disk cache was flushing. Or your RAM is defective, and a flipped bit in RAM got written to the disk.

Now you have 2 billion correct bits and one incorrect bit. The exact impact of this flipped bit could be:

1: A person’s email address in one field changes from bill@whatever.com to cill@whatever.com

2: A field definition is changed in some subtle and unpredictable way.

3: One block in FileMaker’s internal file structure no longer properly points to the next block, resulting in lost data, or schema.
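Case 1 is easy to reproduce. In ASCII, `b` is 0x62 and `c` is 0x63, so flipping the lowest bit of the first byte turns one valid email address into another. A checksum stored alongside the data would notice the change; this is a general technique shown for illustration, not a claim about how FileMaker stores its files.

```python
import zlib


def flip_bit(data: bytes, byte_index: int, bit: int) -> bytes:
    """Return a copy of data with a single bit inverted."""
    buf = bytearray(data)
    buf[byte_index] ^= 1 << bit
    return bytes(buf)


original = b"bill@whatever.com"
damaged = flip_bit(original, 0, 0)

# The damaged value is still perfectly valid text, so nothing "looks" wrong...
print(damaged.decode("ascii"))  # cill@whatever.com
# ...but a CRC-32 taken of the original no longer matches (CRC-32 catches every single-bit error).
print(zlib.crc32(original) == zlib.crc32(damaged))  # False
```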

And on and on. At heart, there is no difference between corrupted data and corrupted schema. FileMaker has to detect that one bogus bit among billions. But in many cases, the new “corrupted” state is perfectly valid. It still conforms to the rules of FileMaker’s file structure. It has just changed subtly.

Corruption is impossible to really “detect” or “fix.” Instead, you look for the symptoms of corruption and try to eradicate them. FileMaker’s tools do this in ways I don’t understand, and they could certainly be better.

But I think any idea that FMI could just “fix” the “corruption” if they just put time into it is false. It is a computer science problem that isn’t really fixable.

If you want to avoid corruption, you just have to follow the procedures. Never use a recovered file. Never use a crashed file. Always go back to backups.

To the point above about hidden corruption: you’re totally right. That’s the real problem. And to avoid that, your best bet is (and I stand by this 100%) always use the best hardware possible. Use a real server class computer, use expensive RAM, use high-quality disks and a high-quality RAID system. Use a good UPS. These things make all the difference.

Sorry to ramble 🙂

Changing from FileMaker 8.5 to 9 can have some frustrating results. When you open a file in FMP 9, you may get the dreaded “this file is damaged, use recovery” (paraphrased). When you try to recover the file, you get the even more dreaded “this file is corrupted” (paraphrased).

Do not recover the file. Open the file in your previous version (8.0, 8.5). Save a copy – compacted, and then try to open the file in FMP 9.

If this doesn’t work, go back to 8.0/8.5, create a new file, and import all of the tables from the so-called damaged file. Now you can import all of the data. You’ll need to import the scripts and redesign the layouts. This seems like a lot, but you’ll still have your data.

In the words of Comic Book Guy – most informative blog entry ever.

Jesse – reading your comments about the vacuum cleaner still makes me cringe. I honestly wake up at night screaming ‘Not the orange outlets!!’

Thanks for the continued clarification on this entry. I appreciate it and feel that I have a much better understanding of corruption…still hate it, but at least know it a bit better!

@Drew – I’m pretty sure you had to spend about 24 hours recovering files too. Those were the days.

@drew: 🙂 Just for the record, Drew Grgich is the Drew I’m referring to when I say “Everybody should have a Drew.” Which is something I say a lot. He’s a rare breed: Extremely competent Windows administrator *and* cool guy. I’m glad you liked the article. Jesse’s occasionally good for something.

The main question remains unanswered: Is this file damaged?

Or more important: Are you sure the file is NOT damaged?

See website for an answer. I’ll also comment on some details of this blog later.

Winfried

@all: Winfried’s article is well worth the read (I’ve read it many times). Just in case it wasn’t obvious, here’s the direct link:

How to Cope with File Corruption

Thanks, Winfried. Excellent stuff. I haven’t had the chance to use FMDiff for this purpose, but I’m sure I will some day.

Find problems: damaged index

The problem with turning indexing off and on may be:

a dependent calculation becomes unstored as well, and further down the road, value lists, relationships, or other things stop working. Indexing of dependent fields may never be turned on again. So be careful with that!

FileMaker Server 9 and FileMaker Pro 9 Consistency Check

What it does, and what it does NOT do

It tests if the blocks are linked correctly to the previous and next one

It tests whether the internal table of contents of IDs point to the right blocks

It does NOT assure the file is 100 % OK! Claiming so is plain wrong.

It checks NEITHER the consistency of the data NOR the so-called file structure (what makes up Tables, Fields, Scripts, Layouts, etc.)

FileMaker Server 9 Backups

The new perform consistency check box in your backup schedule

It simply runs the same tests as described above.

It does NOT assure the file is 100 % OK! Claiming so is plain wrong.

It checks NEITHER the consistency of the data NOR the so-called file structure (what makes up Tables, Fields, Scripts, Layouts, etc.)

Admittedly, it’s better than nothing and surely catches some common problems, but you may NOT rely on these tests for 100 % corruption-free files.

Save a Copy As -> Compacted

Does it really remove corruption? Not really, and on occasion it makes things even worse.

This may become clear if we know what it really does:

It reads the file in its logical block sequence. It tests how much of each block is filled and, if possible, fills it with part of the contents of the next block. Then that information is written to the new file.

If, due to some damage, the block length of the previous block was not set correctly, then either a gap with random data appears or part of the old data is overwritten. This can cause severe problems and crashes.

If there is already some damage within the block, this may be copied without further ado.
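Winfried’s description can be illustrated with a toy model: each block carries a declared “used length,” and the compacting copy trusts it. Blocks here are 16 bytes instead of 4K for readability, and the whole layout is an assumption, not FileMaker’s real format.

```python
from typing import List, Tuple

# Toy block: (declared_used_length, raw_block_bytes)
ToyBlock = Tuple[int, bytes]


def compact_copy(blocks_in_logical_order: List[ToyBlock]) -> bytes:
    """Concatenate the used part of each block, trusting the declared length."""
    out = bytearray()
    for used, raw in blocks_in_logical_order:
        out += raw[:used]  # 'used' is never validated, so damage is copied as-is
    return bytes(out)


def toy_block(text: bytes, size: int = 16) -> bytes:
    """Pad text with zero bytes to a fixed toy block size."""
    return text + b"\x00" * (size - len(text))


healthy = [(5, toy_block(b"Hello")), (6, toy_block(b" world"))]
too_long = [(8, toy_block(b"Hello")), (6, toy_block(b" world"))]   # length set too high
too_short = [(3, toy_block(b"Hello")), (6, toy_block(b" world"))]  # length set too low

print(compact_copy(healthy))    # b'Hello world'
print(compact_copy(too_long))   # b'Hello\x00\x00\x00 world'  (junk bytes in the gap)
print(compact_copy(too_short))  # b'Hel world'  (data silently lost)
```

In both damaged cases the output is a perfectly well-formed new file, which is why a compacted copy can carry damage forward without complaint.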

Comment on comment 10. by Geoff Coffey, 2007-08-17

You all may not be aware that help is on its way: FMDiff tells you that a file is damaged if it is – and where.

Read How to Cope with File Corruption

@winfried: First, thanks for the clarification. We never intended to say that the consistency check ensures a perfect file, and I have cleaned up some “casual” language that went too far. The key is that with Server 8, you could have damaged files that keep running for weeks. Now, in 9, you have a better chance of finding out early if the backup-time consistency check can catch it, so we recommend turning this on in most cases, at least for a once-nightly backup.

As for turning off indexing, I’m not aware of any case where removing an index causes a dependent calculation field to become unstored. If that can happen, it would certainly cause problems that are hard to track down later. But FileMaker is happy to store a calculation based on an unindexed field. As far as I understand it, this is only a concern if you make another calc field unstored. Are you sure removing an index can lead to this situation? What are the circumstances?

Again, thanks for the feedback and good info.

In his session, Jon Thatcher solicited our ideas about how the report after recovery could be improved. I’d take this offer seriously. For example, we could request a result that says when nothing had to be changed: “This file did not appear to need recovery.” That would at least tell us nothing was changed. Or a verbose description of what was actually changed. Or even an assessment of whether the resulting data was worth recovering, how many blobs were lost and how many were rescued, etc. The engineers get a fair amount of info back from the recovery process and (apparently) we can request that it be put into a report, like the Import or Conversion Report.

Your constructive ideas to the engineers can go into the Help -> Send us your feedback selection in FMPA9.

I spent the better part of a week recovering a database with 2 million records. I didn’t compact or recover the original file. Instead, I exported 60,000 records at a time to Excel files, then imported them into FMP9 files split alphabetically. In the process I had numerous crashes, but because the files were split alphabetically, I only had to start over for the particular letter. After I had all of the A-Z files, I imported them one at a time into a master file. Not a very pleasant experience, but it worked.
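The two tricks in this comment, fixed-size export batches and per-letter groups so that a crash only costs one letter, sketched in Python. The 60,000 batch size comes from the comment; the function names and the `#` bucket for blank names are illustrative assumptions.

```python
from collections import defaultdict
from itertools import islice
from typing import Dict, Iterable, Iterator, List


def batches(items: Iterable[str], size: int = 60_000) -> Iterator[List[str]]:
    """Yield consecutive chunks of at most `size` items."""
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


def by_initial(names: Iterable[str]) -> Dict[str, List[str]]:
    """Group records by first letter so a crash only forces a redo of one letter."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for name in names:
        groups[name[:1].upper() or "#"].append(name)  # blank names go to "#"
    return dict(groups)


# 130,000 records -> three export files: 60,000 + 60,000 + 10,000.
print([len(b) for b in batches(str(n) for n in range(130_000))])
```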

Hey Geoff and Jesse,

Just as an FYI, Thatcher’s presentation is available for download on the DevCon update site now. The link is in the DevCon binder, under “Conference Information” on the General Information tab, just in case you weren’t aware.

Good dialogue here… in our case we did not see any errors or corruption until updating from 8.5 to 9.0. Does this imply an error that was lurking but not seen (likely from a previous Server crash), or does this imply some issue with FMP 9?

We haven’t had any luck with compacting or recovering… the database is functioning as best we can tell on 8.5.

Seeing this same behavior (corruption reported only when opening in 9.0) on several files makes me wonder.

@bryan: The most likely explanation is that you do have some under-the-hood corruption that just has no visible impact. FileMaker 9 does something called a consistency check on the file when it is opened to look for corruption. It does this to help detect problems early before they’re likely to lead to major problems.

In your case, the consistency check is failing so 9 is complaining that the file is damaged, even though in 8, where no check is done, it runs “fine.”

In most cases, simply saving a compacted copy in 8 or 8.5, then re-opening it in 9, will fix this problem. It sounds like that isn’t working in your case. You may need to try a more aggressive approach. Have you tried recovery (I know…bad word…try it anyway, at least to see if it makes the file openable in 9)? Also, sometimes it works to clone the file, save the clone as a compacted copy, then re-import the data from the original. Do this all in 8 or 8.5, then try opening in 9.

Let us know what happens using the Contact Us button above.

I wonder why losing power to an idle FMP Server would cause corruption? I’m new to FMP, coming from 4th Dimension, and I never had file corruption problems in 4D in 15 years with apps running on 4D Server the entire time. 4D Server flushes data to disk on a regular basis, so unless the server is writing at the moment of failure, the files are always in good shape. FileMaker should adopt this strategy so that your files are only vulnerable during the moments when they are being written to.

Idle “anything” server:

When a disk loses power, it has a tendency to just dump whatever it had in its buffer/cache to disk, and it can land anywhere on the disk.

To counter this, you should have your hardware configured properly. Essentially, this is file system corruption.
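For reference, the “flush data to disk regularly” approach mentioned above usually comes down to something like this general pattern. It is a sketch of the technique, not how FileMaker or 4D actually write their files, and `durable_write` is a hypothetical helper name.

```python
import os


def durable_write(path: str, data: bytes) -> None:
    """Write data so it has been handed to the drive (not just a cache) when this returns."""
    tmp = path + ".tmp"
    with open(tmp, "wb") as f:
        f.write(data)
        f.flush()             # Python's buffer -> operating system cache
        os.fsync(f.fileno())  # operating system cache -> the drive
    os.replace(tmp, path)     # swap in the new file in one step (atomic on POSIX)
```

Even `fsync` only gets data as far as the drive; a drive with a volatile write cache can still lose it on power failure, which is why the hardware advice above matters.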

This could really use an update for FM v10.

Michael:

I agree. Any takers? 🙂

Some day we’ll get back to writing. I miss it.

Geoff

What if the File -> Save a Copy procedure doesn’t work? Is there any other way to clean up an .fp7 file?

How can you save a compacted copy if the file is damaged and won’t open? As far as I can see you can’t.
